Mistral Large vs Claude 3 Opus: Enterprise Showdown

If you work with enterprise AI, you already feel the tension between Mistral Large and Claude 3 Opus.

Teams ask real questions now. Not hype questions.

Which model handles sensitive data better? Which one stays stable at scale? Which one breaks under load?

I have spent months testing large language models for business use.

Some tests were clean. Some were messy.

This post comes from that work. Real use, real limits, real trade-offs.

I will walk you through how Mistral Large and Claude 3 Opus behave inside enterprise systems.

No sales talk. No soft claims. Just what matters when real money and data are involved.

Why enterprises compare these two models

Most enterprise AI model comparisons start with GPT.

But many teams look past it now.

Control, cost, and privacy push buyers elsewhere.

Mistral Large keeps coming up in Europe and among regulated firms.

Claude 3 Opus adoption keeps rising in US-based companies.

Both target serious workloads.

This comparison exists because these models solve different pain points.

Some teams need strict data privacy rules.

Others need long memory and steady reasoning.

Many need both, which gets tricky fast.


What enterprises actually need from an AI model

Enterprise needs differ from startup needs.

Speed alone is not enough.

Here is what most teams ask me for:

- Stable answers across long sessions

- Clear handling of private data

- Predictable cost at scale

- Strong reasoning accuracy

- Easy audit trails

Large language models for business fail when they miss these basics.

Quick snapshot comparison

Before details, here is a clean view.

| Feature | Mistral Large | Claude 3 Opus |
|---|---|---|
| Provider | Mistral AI | Anthropic |
| Context length | Very long | Extremely long |
| Deployment | Cloud, on-prem | Cloud only |
| Privacy focus | Strong | Strong |
| Reasoning style | Direct, technical | Careful, verbose |
| Best fit | Regulated firms | Knowledge-heavy teams |

This table hides the hard parts though.

Let’s open it up.

Mistral Large enterprise use in real systems

Mistral Large feels built by engineers for engineers.

The answers are short. Sometimes blunt.

That is not a flaw.

In enterprise AI workflows, clarity beats charm.

I have used Mistral Large inside internal tools, code review systems, and support bots.

It stays focused. It does not wander much.

Strengths I see often

- Strong performance in technical tasks

- Clean output for structured prompts

- Flexible deployment options

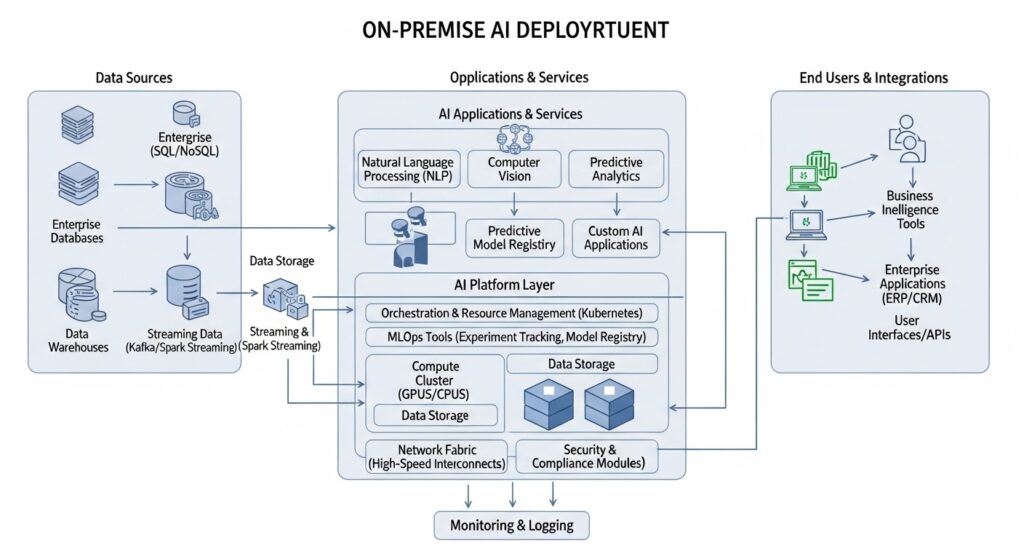

- Works well with on-premise AI deployment

That last point matters more than blogs admit.

Many banks and health firms cannot send data outside their walls.

Mistral meets them where they are.

Limits you should know

Mistral Large is not warm or chatty.

For customer-facing chat, it may feel dry.

It also needs tight prompts.

Loose prompts lead to short or shallow replies.

If your team expects creative tone, you will need tuning.

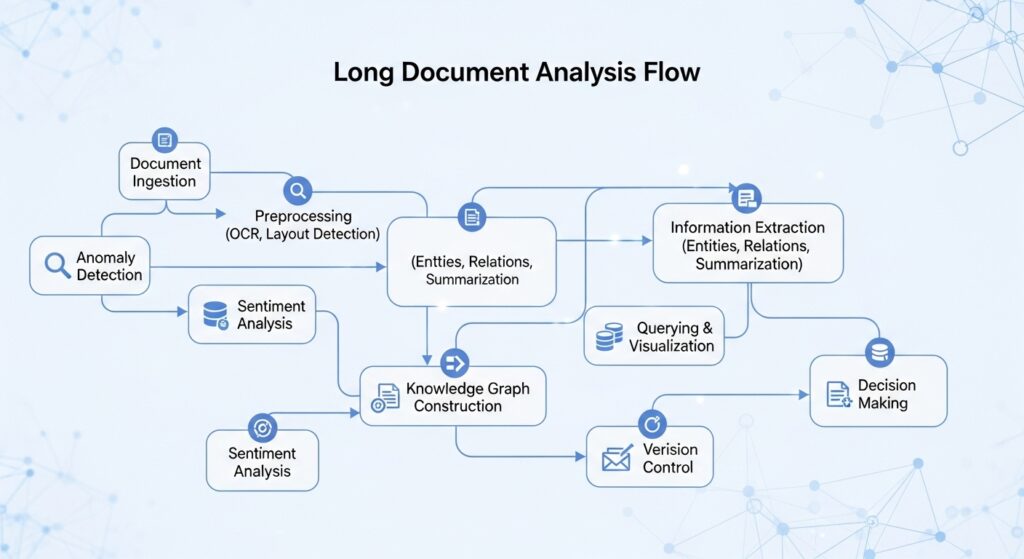

Claude 3 Opus enterprise behavior

Claude 3 Opus feels very different.

It talks like a careful analyst.

Long answers. Clear structure.

Sometimes too careful.

Claude 3 Opus shines in research, policy review, and internal docs.

It reads long files without losing track.

That long-context ability is real.

I tested with legal docs over 150 pages.

Claude stayed steady.

Where Claude 3 Opus excels

- Long document analysis

- Policy and compliance tasks

- Reasoning accuracy over time

- Customer support with empathy

Claude avoids risky answers.

That makes compliance teams calm.

Where Claude struggles

Claude can over-explain.

That slows workflows.

It also runs cloud-only.

For firms needing full data control, that blocks adoption.

Cost can also creep up with long prompts.

Data privacy and compliance

This is where enterprise buyers pause longest.

Mistral Large allows more control.

On-premise AI deployment changes the risk profile.

Claude 3 Opus depends on strong provider trust.

Anthropic does well here, but control still sits outside your own infrastructure.

For enterprise compliance, ask three questions:

- Where does the data live?

- Who can access the logs?

- How do audits work?

Mistral gives more knobs.

Claude gives more guardrails.

Neither is unsafe.

They just fit different risk models.
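To make those three questions concrete, here is a minimal sketch of an audit log entry. Everything in it is my own assumption for illustration: the field names, the `audit_record` helper, and the choice to store a hash of the prompt instead of the prompt text. It is not how either vendor logs requests.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user: str, backend: str, data_region: str, prompt: str) -> dict:
    """Build one audit entry (hypothetical schema, adapt to your own policy)."""
    return {
        # When the call happened, in UTC so logs from different sites line up.
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Who made the call: helps answer "who can access what".
        "user": user,
        # Which deployment served it, e.g. an on-prem cluster or a cloud API.
        "backend": backend,
        # Where the data was processed: helps answer "where does data live".
        "data_region": data_region,
        # A fingerprint of the prompt, so the log itself holds no raw text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    }


record = audit_record("analyst-17", "on-prem-cluster", "eu-west", "Summarize Q3 risk report")
print(json.dumps(record, indent=2))
```

Hashing the prompt is one possible trade-off. Auditors can confirm a given request was logged, but they cannot read it back, so check whether your compliance team needs the full text.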

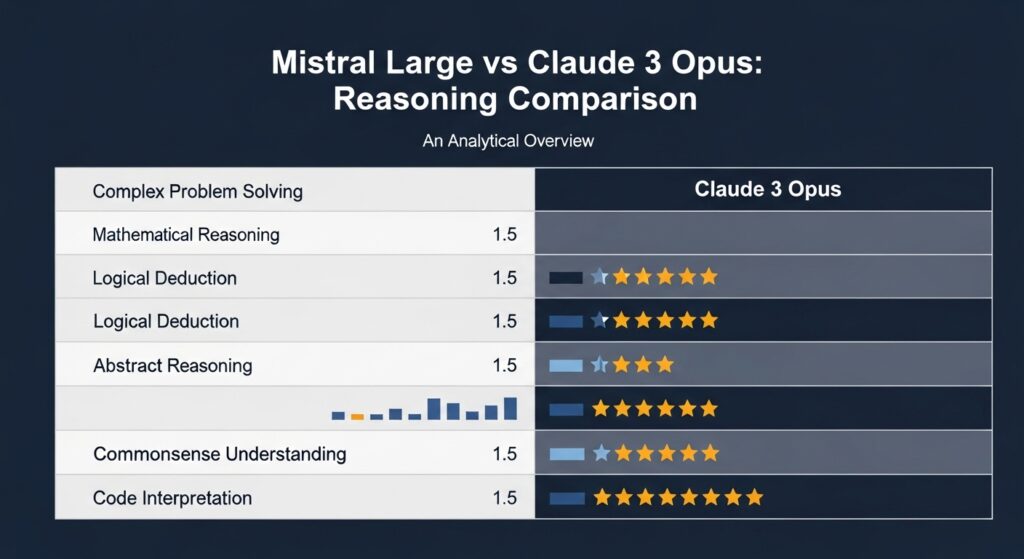

Reasoning accuracy under pressure

Reasoning accuracy sounds abstract until it breaks.

I ran both models on edge cases.

Incomplete data. Conflicting inputs. Long threads.

Mistral Large stays literal.

It sticks to what is given.

Claude 3 Opus tries to reconcile ideas.

Sometimes it adds extra caution.

Neither hallucinated often in my tests.

Claude was safer. Mistral was faster.
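If you want to run similar edge cases yourself, a small harness helps. The sketch below is not the setup I used. It assumes you wrap each model in a plain function that takes a prompt and returns text. The `EdgeCase` fields, the `run_edge_cases` helper, and the `stub_model` stand-in are all hypothetical names.

```python
from typing import Callable, List, NamedTuple


class EdgeCase(NamedTuple):
    name: str
    prompt: str
    must_contain: List[str]      # phrases a grounded answer should include
    must_not_contain: List[str]  # phrases that would signal an invented fact


def run_edge_cases(model: Callable[[str], str], cases: List[EdgeCase]) -> dict:
    """Run every case through `model` and return {case name: passed}."""
    results = {}
    for case in cases:
        answer = model(case.prompt).lower()
        has_required = all(p.lower() in answer for p in case.must_contain)
        has_forbidden = any(p.lower() in answer for p in case.must_not_contain)
        results[case.name] = has_required and not has_forbidden
    return results


def stub_model(prompt: str) -> str:
    """Stand-in for a real API call; swap in your own client wrapper."""
    return "The contract does not state a renewal date."


cases = [
    EdgeCase(
        name="incomplete data",
        prompt="The contract lists a start date only. When does it renew?",
        must_contain=["does not state"],
        must_not_contain=["renews on"],
    ),
]

print(run_edge_cases(stub_model, cases))
```

Substring checks are crude. They catch obvious invented facts but miss subtle ones, so treat a pass as a signal and still read a sample of answers by hand.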

Cost of enterprise AI at scale

Pricing changes often, so I avoid exact numbers.

Patterns matter more.

Mistral Large costs stay predictable with controlled prompts.

On-prem setups cut token fees but add infra cost.

Claude 3 Opus charges climb with long context use.

Heavy document work adds up fast.

Teams forget to model usage growth.

That mistake hurts budgets later.

Always test with real data volume.
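Here is a rough way to model usage growth before it hits the budget. This is a sketch under stated assumptions: the per-million-token prices are placeholders, not real vendor rates, and `UsageProfile` and `project_costs` are names I made up for this example.

```python
from dataclasses import dataclass


@dataclass
class UsageProfile:
    requests_per_month: int   # starting request volume
    input_tokens: int         # average prompt tokens per request
    output_tokens: int        # average completion tokens per request
    monthly_growth: float     # 0.10 means 10% more requests each month


def project_costs(profile, price_in_per_mtok, price_out_per_mtok, months):
    """Return projected cost per month, compounding request growth."""
    costs = []
    requests = float(profile.requests_per_month)
    for _ in range(months):
        tokens_in = requests * profile.input_tokens
        tokens_out = requests * profile.output_tokens
        cost = (tokens_in * price_in_per_mtok
                + tokens_out * price_out_per_mtok) / 1_000_000
        costs.append(round(cost, 2))
        requests *= 1 + profile.monthly_growth
    return costs


# Placeholder prices (1.0 in, 3.0 out per million tokens), NOT real rates.
short_prompts = UsageProfile(100_000, 1_000, 300, 0.10)
long_documents = UsageProfile(100_000, 50_000, 300, 0.10)

print(project_costs(short_prompts, 1.0, 3.0, 3))
print(project_costs(long_documents, 1.0, 3.0, 3))
```

With these placeholder numbers, the first month comes to 190.0 for short prompts and 5090.0 for long documents at the same request volume. Input length drives the bill, which is why heavy document work adds up fast.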

AI coding assistants in enterprise use

Both models can help developers.

They feel different here too.

Mistral Large works well inside IDE tools.

Fast suggestions. Minimal noise.

Claude 3 Opus explains code deeply.

Great for reviews and learning.

If speed matters, Mistral wins.

If teaching matters, Claude wins.

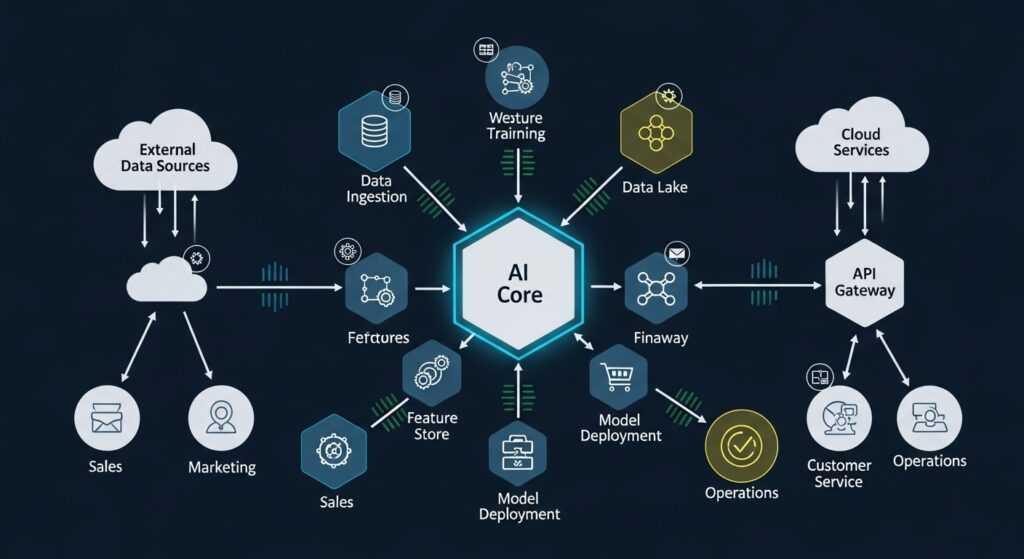

Integration and tooling

Enterprise AI lives inside systems, not chat boxes.

Mistral integrates cleanly with custom stacks.

APIs feel straightforward.

Claude works best with managed pipelines.

Less low-level tuning.

Neither choice is wrong.

It depends on how much control your team wants.

Real decision factors I see in teams

After dozens of calls, patterns repeat.

Teams choose Mistral Large when they need:

- Full data control

- On-prem support

- Technical output

Teams choose Claude 3 Opus when they need:

- Long reading tasks

- Clear explanations

- Strong safety tone

The Mistral Large vs Claude 3 Opus showdown is not about the best model.

It is about best fit.

Common mistakes buyers make

I see these often.

- Picking based on demos

- Ignoring deployment rules

- Underestimating prompt costs

- Skipping security reviews

Enterprise AI punishes shortcuts.

Test in real workflows.

Stress the model.

Then decide.

Updated comparison table

| Area | Mistral Large | Claude 3 Opus |
|---|---|---|
| Best workloads | Tech, ops | Docs, policy |
| Privacy control | High | Medium |
| Context handling | Strong | Very strong |
| Tone | Direct | Careful |
| Setup effort | Higher | Lower |

When hybrid setups make sense

Some firms use both.

Yes, really.

Mistral Large for internal tools.

Claude 3 Opus for document review.

This split reduces risk.

It also raises cost and complexity.

Only large teams should try it.
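A hybrid split usually comes down to a routing rule. The sketch below shows one possible shape. The backend labels, the `Task` enum, and the `pick_backend` function are hypothetical, and the rule that restricted data always stays on-prem is my assumption about a typical policy, not something either vendor requires.

```python
from enum import Enum


class Task(Enum):
    INTERNAL_TOOL = "internal_tool"
    DOCUMENT_REVIEW = "document_review"


# Hypothetical backend labels; map these to your own client wrappers.
ROUTES = {
    Task.INTERNAL_TOOL: "mistral-large-onprem",
    Task.DOCUMENT_REVIEW: "claude-3-opus-cloud",
}


def pick_backend(task: Task, contains_restricted_data: bool) -> str:
    """Pick a backend label; restricted data never leaves the on-prem route."""
    if contains_restricted_data:
        return ROUTES[Task.INTERNAL_TOOL]
    return ROUTES[task]


print(pick_backend(Task.DOCUMENT_REVIEW, contains_restricted_data=False))
print(pick_backend(Task.DOCUMENT_REVIEW, contains_restricted_data=True))
```

Keeping the rule in one function makes it easy to audit. The added cost shows up elsewhere: two sets of prompts, two monitoring setups, two contracts.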

Final thoughts, not a conclusion

I have no favorite here.

Tools are tools.

The Mistral Large vs Claude 3 Opus comparison keeps shifting as models update.

What matters stays steady.

Know your data.

Know your risk.

Know your workflows.

Everything else follows.

FAQs

What is the main difference between Mistral Large and Claude 3 Opus?

Mistral focuses on control and deployment. Claude focuses on safety and long context.

Which model is better for enterprise compliance?

Mistral offers more control. Claude offers stronger safety behavior.

Can Mistral Large run on-premise?

Yes. That is a key reason regulated firms choose it.

Is Claude 3 Opus good for long documents?

Yes. It handles very long files better than most models.

Which model costs less at scale?

It depends on usage. Long prompts raise Claude costs faster.